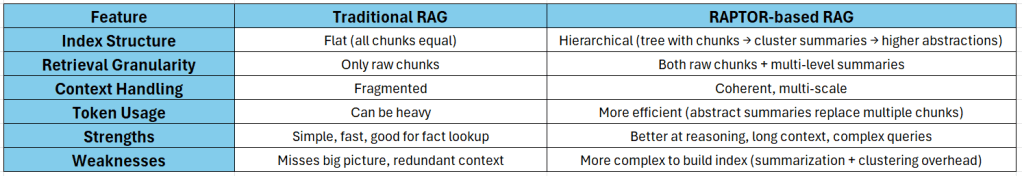

Most retrieval-augmented generation (RAG) systems today are flat. They split documents into chunks, embed those chunks, and store them in a vector database. When a query comes in, they simply fetch the top-k most similar chunks.
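To make "flat" concrete, here is a minimal sketch of top-k retrieval. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `flat_retrieve` and the sample chunks are hypothetical names and data used only for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words counts.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def flat_retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Every chunk sits at the same level; rank them all and keep the top k.
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


chunks = [
    "the invoice is due in thirty days",
    "refunds are processed within five days",
    "the office is closed on public holidays",
]
top = flat_retrieve("when is the invoice due", chunks, k=1)
print(top)
```

Every chunk competes on equal footing here, and nothing in the index records how the chunks relate to each other.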

For simple fact lookups, this works fine. But when a question depends on broader context, two big problems show up:

1️⃣ The context feels fragmented, because chunks don’t carry the bigger picture.

2️⃣ The LLM gets overloaded with too many raw chunks, wasting tokens while still missing nuance.

This is where RAPTOR (Recursive Abstractive Processing for Tree-Organized Retrieval) comes in.

Instead of treating all chunks equally, RAPTOR builds a hierarchical index.

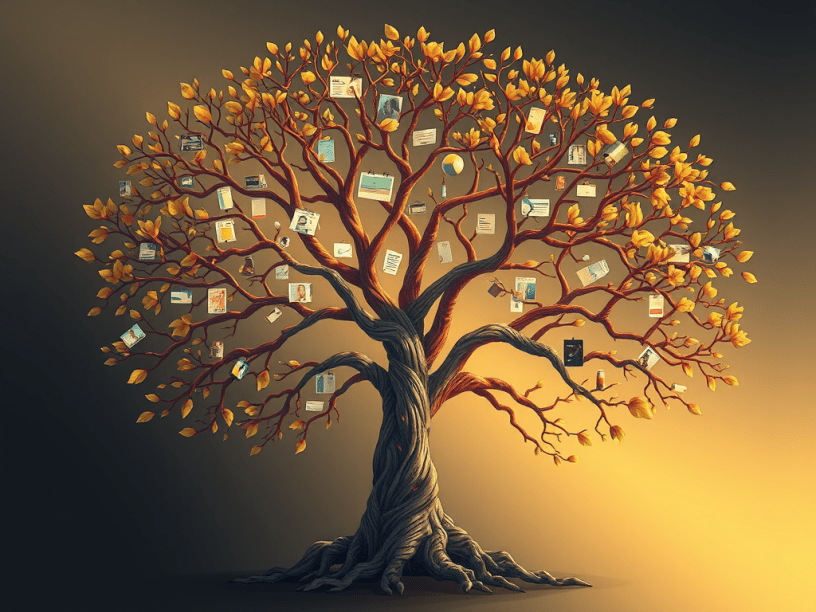

Think of it like a tree:

💠 At the leaf level, you have detailed chunks.

💠 Similar leaves are clustered together and summarized into higher-level nodes (branches).

💠 Branches then roll up into the trunk, carrying broader themes.

💠 At the very top, you have the big picture, the overall context.
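The tree above can be sketched in a few lines. This is an illustration under loud assumptions, not RAPTOR's actual implementation: `embed` is a toy bag-of-words vector, `cluster` is a simple greedy grouping, and `summarize` is a hypothetical placeholder that keeps frequent words where a real build would call an LLM.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    level: int  # 0 = leaf chunk, higher = broader summary
    children: list["Node"] = field(default_factory=list)


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words counts.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def summarize(texts: list[str]) -> str:
    # Hypothetical stand-in for an LLM summary: the six most frequent words.
    counts = Counter(w for t in texts for w in re.findall(r"[a-z]+", t.lower()))
    return " ".join(w for w, _ in counts.most_common(6))


def cluster(nodes: list[Node], threshold: float = 0.25) -> list[list[Node]]:
    # Greedy grouping: join the first cluster whose first member is similar enough.
    groups: list[list[Node]] = []
    for node in nodes:
        for group in groups:
            if cosine(embed(node.text), embed(group[0].text)) >= threshold:
                group.append(node)
                break
        else:
            groups.append([node])
    return groups


def build_tree(chunks: list[str]) -> tuple[Node, list[Node]]:
    # Cluster and summarize each layer until a single root remains.
    if not chunks:
        raise ValueError("need at least one chunk")
    layer = [Node(c, 0) for c in chunks]
    all_nodes = list(layer)
    level = 0
    while len(layer) > 1:
        level += 1
        groups = cluster(layer)
        if len(groups) == len(layer):
            groups = [layer]  # nothing merged, so roll everything into one root
        layer = [Node(summarize([n.text for n in g]), level, g) for g in groups]
        all_nodes.extend(layer)
    return layer[0], all_nodes


chunks = [
    "billing invoices are due in thirty days",
    "billing invoices paid late incur a fee",
    "security logins require two factor authentication",
    "security logins lock after five failed attempts",
]
root, all_nodes = build_tree(chunks)
print(root.level, [child.text for child in root.children])
```

With these four chunks, the two billing leaves and the two security leaves each roll up into a branch, and the two branches roll up into a single root.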

At query time, RAPTOR doesn’t just pull raw chunks. It can retrieve thematic summaries or details depending on what best matches the question. This means:

💠 Better reasoning: the system can work from summaries instead of drowning in details.

💠 More efficiency: fewer tokens, since a summary can replace dozens of chunks.

💠 Closer to human thinking: we remember concepts and bring in details only when needed.
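One simple way to picture query-time retrieval is to put leaves and summaries in a single pool and let similarity decide which level answers the question. The sketch below assumes a toy bag-of-words `embed` and hand-written summary nodes; `retrieve`, the node texts, and the queries are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words counts.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, nodes: list[tuple[int, str]], k: int = 1) -> list[tuple[int, str]]:
    # Score leaves (level 0) and summaries (level 1+) together, keep the top k.
    q = embed(query)
    return sorted(nodes, key=lambda n: cosine(q, embed(n[1])), reverse=True)[:k]


# (level, text) pairs: level 0 = raw chunk, level 1 = hand-written summary.
nodes = [
    (0, "billing invoices are due in thirty days"),
    (0, "billing invoices paid late incur a fee"),
    (0, "security logins require two factor authentication"),
    (0, "security logins lock after five failed attempts"),
    (1, "billing covers invoices due dates and late fees"),
    (1, "security covers logins two factor and lockouts"),
]

broad = retrieve("overview of billing invoices and late fees", nodes)
specific = retrieve("how many failed attempts lock logins", nodes)
print(broad)     # best match for the thematic question
print(specific)  # best match for the detailed question
```

In this toy data, the thematic question lands on a level-1 summary while the detailed question lands on a level-0 chunk, without any level-specific logic in the retriever.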

These benefits come with a tradeoff: building the index is more complex, since it involves embedding, clustering, and recursive summarization. Once the tree is built, though, query time stays simple.

If I were to draw an analogy:

Traditional RAG is like digging through a messy desk full of sticky notes.

RAPTOR is like opening a well-organized file with chapters and summaries. It takes more work upfront, but once done, it helps you reason much faster.

Going forward, I see RAPTOR becoming an important cog in enterprise knowledge systems. Hierarchical retrieval feels like the natural next step for scaling RAG.