For the last few months, I’ve been writing about how agents will be the next big shift in AI. We’re no longer just talking to models and getting answers — we’re now starting to delegate tasks to them.

#Amazon’s #Nova Act is a good example of this shift.

This new AI model doesn’t just respond — it can act. It can open a browser, book a trip, submit an internal form, update your calendar, even place a lunch order — and do all of this like a human would.

What stood out for me is how Amazon is focusing on reliability. They’re not just building something flashy. They’ve worked on getting the basics right — like clicking buttons, handling dropdowns, navigating popups — with more than 90% accuracy in internal tests. These are small actions, but they’re what make or break an AI assistant in real-world use.

Because this will be integrated into Alexa, it could quietly enter millions of homes. Suddenly, your voice assistant might not just remind you of meetings but also reschedule them, send follow-ups, and maybe even order that dinner you forgot about.

Of course, we still need to watch out — with more autonomy comes more risk. Privacy, safety, and reliability are all things we need to keep an eye on. But this space is moving fast.

In my view, we’ll start seeing two kinds of agents:

👉 Simple ones powered by SLMs (small language models) + APIs for everyday tasks

👉 Complex, autonomous ones for multi-step workflows — like travel, HR ops, finance, and customer onboarding

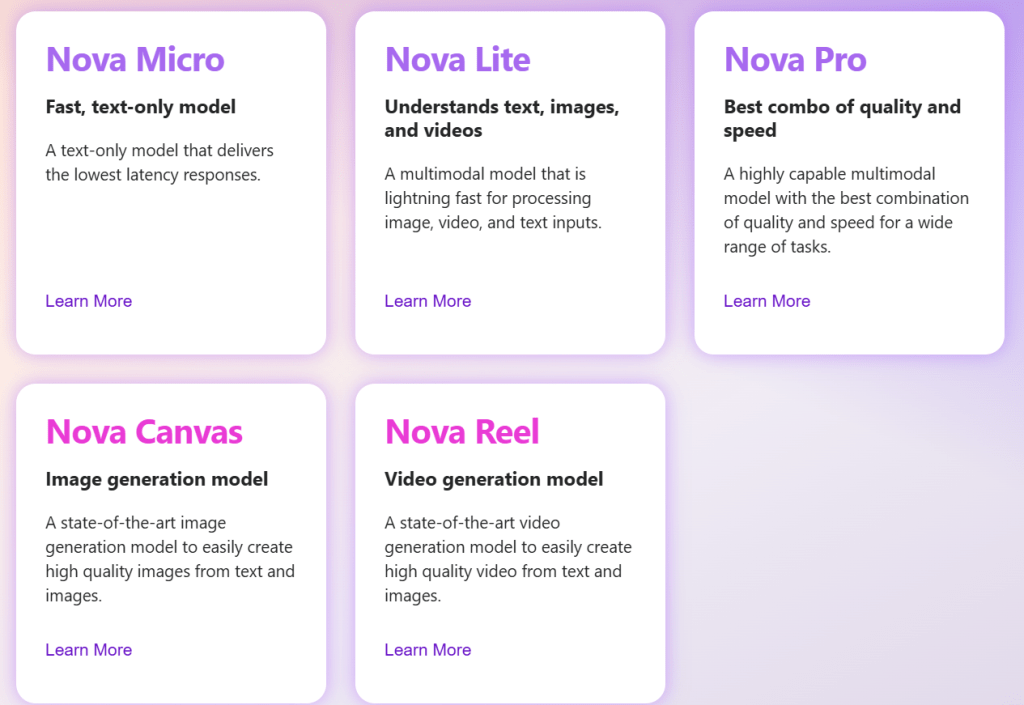

I believe we are just getting started. Nova Act is still in research preview, but what’s exciting is how it’s being built: with builders in mind, as a flexible SDK. (Developers interested in building with Nova on Bedrock can find the link in the comments.)