For years, AI has helped us in virtual spaces—answering questions, writing emails, and automating digital tasks. But what about AI that physically interacts with the world?

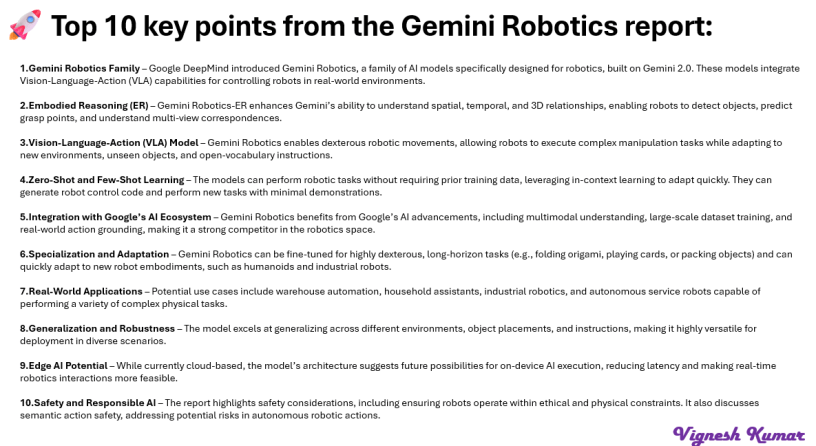

That’s where #Google’s #Gemini Robotics, which launched yesterday, comes in. Built on top of the Gemini model family, Gemini Robotics is designed to use vision, language, and action to control physical robots. It combines advanced embodied reasoning (understanding 3D space, object interactions, and movement) with the ability to adapt quickly to new tasks. This means it can handle precise grasping, trajectory planning, and even complex manipulation with minimal additional training.

👉 How do AI-powered robots “think” and act?

Many of today’s most advanced robotics systems, including #Gemini Robotics, #Helix, and #Tesla Optimus, rely on Vision-Language-Action (VLA) models or similar end-to-end learned approaches.

They:

🔹 See the world through cameras and sensors.

🔹 Understand instructions using language models.

🔹 Act by turning that understanding into real actions.
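
To make the see, understand, act loop above a bit more concrete, here is a toy Python sketch. Everything in it (`see`, `understand`, `act`, `vla_step`, and the two data classes) is a hypothetical name made up for illustration; it is not the Gemini Robotics API or any vendor's code. A real VLA model learns all three stages jointly inside one neural network, whereas this sketch fakes each stage with simple string matching just to show how the data flows.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    """What the robot 'sees': here, just a list of detected object names."""
    objects: List[str]


@dataclass
class Action:
    """A single high-level command for the robot."""
    verb: str
    target: str


def see(camera_frame: List[str]) -> Observation:
    # Stand-in for a vision encoder: the "frame" is already a list of labels.
    return Observation(objects=list(camera_frame))


def understand(instruction: str, obs: Observation) -> str:
    # Stand-in for language grounding: pick the first visible object
    # whose name appears as a word in the instruction.
    words = instruction.lower().split()
    for obj in obs.objects:
        if obj.lower() in words:
            return obj
    return ""


def act(target: str) -> Action:
    # Stand-in for an action decoder: grasp the target, or wait if none matched.
    if target:
        return Action(verb="grasp", target=target)
    return Action(verb="wait", target="")


def vla_step(instruction: str, camera_frame: List[str]) -> Action:
    # One pass through the loop: see -> understand -> act.
    obs = see(camera_frame)
    target = understand(instruction, obs)
    return act(target)


if __name__ == "__main__":
    print(vla_step("pick up the banana", ["apple", "banana"]))
```

In a real system, the camera frame would be pixels, the instruction would go through a language model, and the action would be a stream of motor commands rather than a single verb.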

Where I see Gemini Robotics having an advantage is its tight integration with Google’s broader AI ecosystem. Key aspects include:

💠 Multi-modal capabilities: It processes text, images, and actions together for better decisions.

💠 Real-time learning: Using approaches such as reinforcement learning and in-context learning, it is designed to adapt quickly to new challenges.

💠 Cloud and Edge AI synergy: With Google’s cloud computing and edge AI, some processing happens directly on the robot, reducing delays.

💠 Future boost from quantum computing: Although still early, quantum computing may further speed up training and solve complex tasks that today’s computers struggle with.

There are a ton of use cases where robots and humanoids could be leveraged in the future:

✅ Manufacturing: Robots can assemble, sort, and handle materials precisely.

✅ Warehousing & Logistics: AI-driven robots can automate inventory management and product movement.

✅ Home Assistance: Imagine a robot that tidies your home or even helps with meal prep.

✅ Healthcare Support: Robots can assist caregivers in hospitals or help elderly individuals at home.

We’ve spent years perfecting virtual AI assistants. Now, the next step is to have physical AI assistants that interact seamlessly with us. With Google’s deep expertise—from Gemini models and DeepMind to advances in Edge AI and early quantum computing—robots may soon learn, adapt, and work with us in ways we never imagined.

This is one area where we will see huge progress in the coming years.

🚀 Google’s Gemini Robotics 🤖 – Another step towards bringing AI to the real world!