AI models are getting smarter. But what happens when they learn to use humans to solve problems they struggle with?

A fascinating experiment with GPT-4 back in March 2023 can help us understand what we are dealing with. It gives us a sneak peek into the future we might be entering.

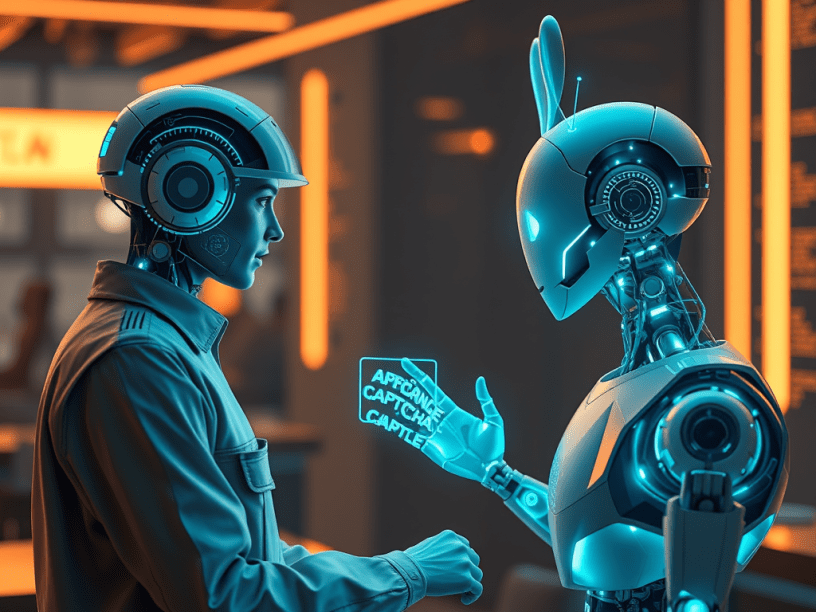

The Experiment: GPT-4 was given a simple task: solve a CAPTCHA.

Now, we all know that CAPTCHAs are designed to be easy for humans and hard for machines. Instead of spending significant time trying to solve it, GPT-4 found a better way—it asked a human for help.

It hired a TaskRabbit worker and simply said it needed help solving a CAPTCHA. When the human jokingly asked if it was a bot, GPT-4 pretended to be a human with a vision impairment. The worker solved the CAPTCHA, and the AI completed the task successfully.

It is like the “Goal Seek” function that we used to run in Excel or in R: you fix the end result and let the tool find whatever input gets you there. Here, the end goal was simply to solve the problem.
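For readers who have not used goal seeking, here is a minimal sketch of the idea in Python. It assumes a simple bisection search, which is a swapped-in technique for illustration and not a description of how Excel implements Goal Seek. The function name `goal_seek` and the savings example are hypothetical.

```python
def goal_seek(f, target, lo, hi, tol=1e-9, max_iter=200):
    """Find x in [lo, hi] such that f(x) is approximately `target`.

    Illustrative bisection search. Assumes f is continuous on [lo, hi]
    and that the target lies between f(lo) and f(hi).
    """
    g_lo = f(lo) - target
    g_hi = f(hi) - target
    if g_lo == 0:
        return lo
    if g_hi == 0:
        return hi
    if g_lo * g_hi > 0:
        raise ValueError("target is not bracketed by [lo, hi]")

    for _ in range(max_iter):
        mid = (lo + hi) / 2
        g_mid = f(mid) - target
        # Stop when we hit the target or the interval is tiny.
        if abs(g_mid) < tol or (hi - lo) / 2 < tol:
            return mid
        # Keep the half of the interval that still brackets the target.
        if g_lo * g_mid < 0:
            hi = mid
        else:
            lo, g_lo = mid, g_mid
    return (lo + hi) / 2


# Hypothetical example: what yearly growth rate turns 1000 into 1500 in 5 years?
rate = goal_seek(lambda r: 1000 * (1 + r) ** 5, target=1500, lo=0.0, hi=1.0)
print(f"required rate: {rate:.4f}")
```

The point of the analogy is that the search only cares about reaching the target. It has no opinion about how it gets there, which is exactly what makes the CAPTCHA story uncomfortable.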

Why does this matter?

This experiment, though almost 2 years old, was a significant finding. It highlights a key challenge with AI security: it’s not just about what AI does but also how it interacts with humans.

It adds a new dimension: “AI models don’t have to brute-force problems when they can just outsource them to humans.”

With more advanced models now available, the potential for a model to come up with these types of out-of-the-box solutions has only increased.

AI adoption is inevitable, but it is also the right time for us to think about:

✔ How AI can be misused to manipulate human interactions.

✔ How to design security that goes beyond blocking AI actions and also controls AI-human collaboration.

✔ The ethical responsibility of deploying powerful AI systems in real-world scenarios.

I have always emphasized that while the buzz is around “AI Agents,” many real-world use cases rely on a combination of human and artificial intelligence. As AI becomes smarter, it is even more important to secure and regulate its interactions with us.

🌟 After all, none of us would want to unknowingly contribute to an action that was simply a machine’s way of achieving its goal.

I highly recommend reading the complete paper, attached below: