On Day 12, OpenAI announced its new frontier models, o3 and o3 mini. What caught my attention is that these models demonstrate advancements that might be bringing us closer to #ArtificialGeneralIntelligence (AGI).

Designed to improve reasoning, o3 excels at solving complex problems across coding, math, and general intelligence, tasks once thought exclusive to humans.

Here is why I believe o3 is a milestone:

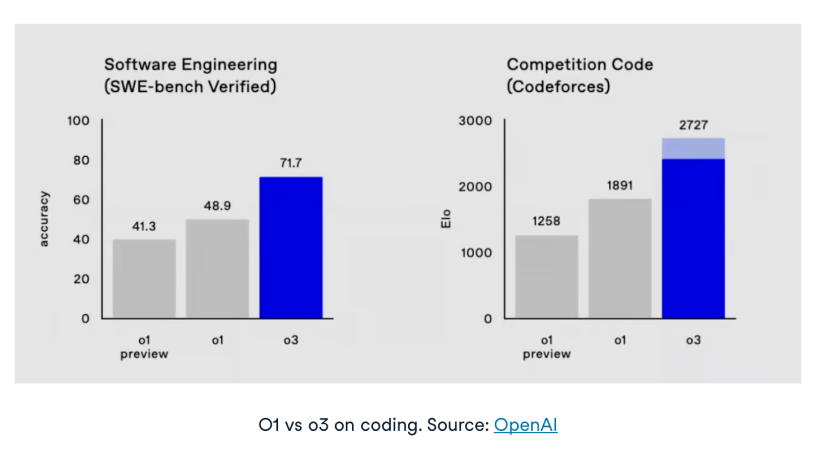

1️⃣ Reasoning Beyond Basics: o3 excels in high-complexity tasks like mathematical research and coding, with a 96.7% score on AIME 2024 (vs. o1’s 83.3%). It also achieved 88% on the ARC-AGI benchmark, surpassing the 85% human-level threshold, ⭐ a first for any AI.

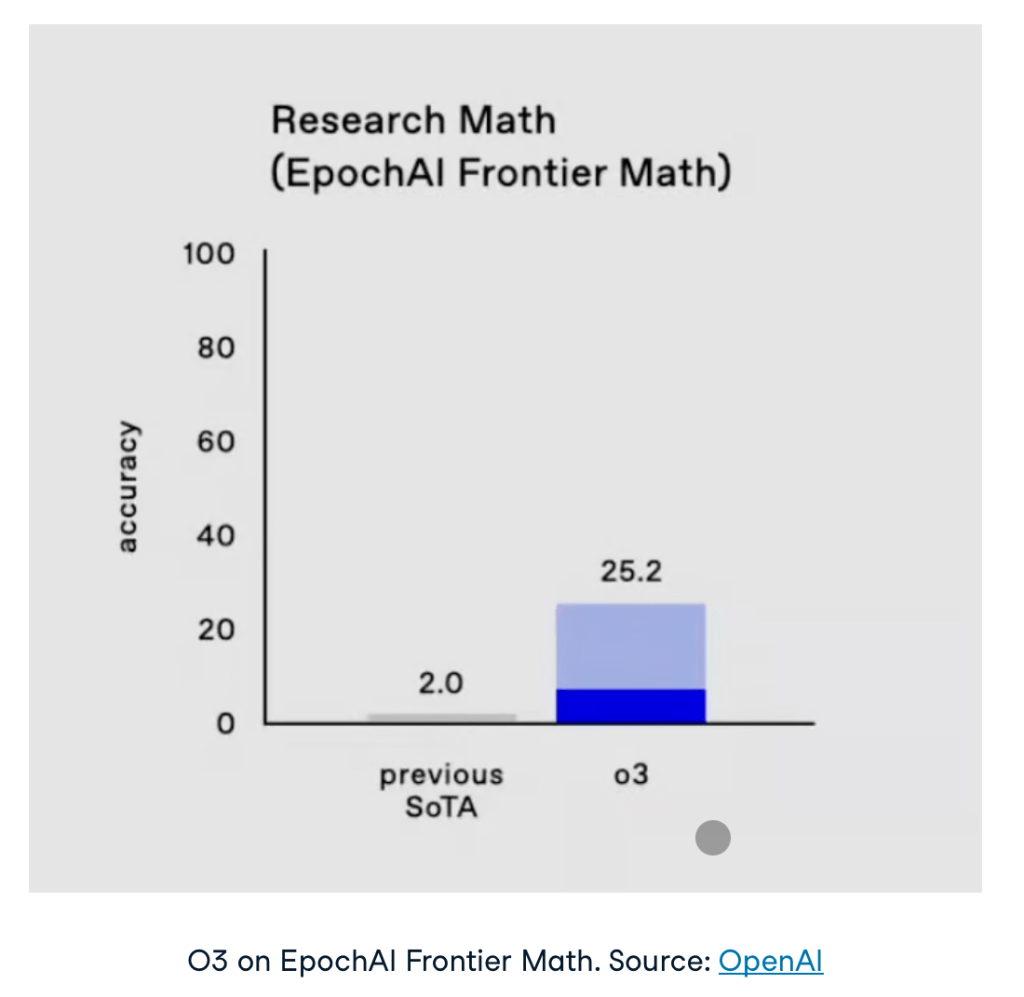

2️⃣ Generalization: On Epoch AI’s FrontierMath benchmark, which challenges models to solve new, unpublished problems, o3 scored 25.2%. This is a significant leap over previous AI models, which typically scored below 2%. These results highlight o3’s ability to adapt and solve unfamiliar tasks, a key trait of AGI.

3️⃣ Collaborative Thinking: Unlike older models that relied on memorized patterns, o3 uses contextual reasoning to tackle multi-layered problems. This is evident in its exceptional performance on GPQA Diamond, where it answered PhD-level science questions with 87.7% accuracy (up from o1’s 78%).

4️⃣ Safety-Focused Rollout: OpenAI’s safety strategy for o3 and o3 mini includes a proactive public safety testing program for researchers. A key innovation is deliberative alignment, where the model reasons in real time to evaluate prompts, going beyond approaches such as RLHF or RLAIF that rely on static rules or datasets. It generates chain-of-thought (CoT) outputs during training and inference to better understand context, intent, and hidden risks, improving safety and adaptability compared to traditional methods.

The introduction of o3 mini, a cost-effective sibling model, also makes advanced AI more accessible, supporting a wider range of use cases while maintaining performance.

OpenAI’s o3 and o3 mini are currently limited to researchers through a safety testing program. o3 mini is planned for release by the end of January, offering a cost-efficient option for reasoning tasks. The full o3 release will follow, with the timeline dependent on insights gained during safety evaluations.

With these advancements, o3 isn’t just a better model; I believe it is a glimpse into the future of AI systems that think, reason, and adapt like humans.

The questions I am curious about:

▪️ What will the ecosystem look like when we attain AGI?

▪️ Do we have a plan for “how to integrate it with our workforce”?

▪️ What current problems (deemed too complex to solve) will suddenly be open to discussion?

2025 to 2030 might turn out to be the most transformative and disruptive five years from a technology perspective.

How prepared are we?