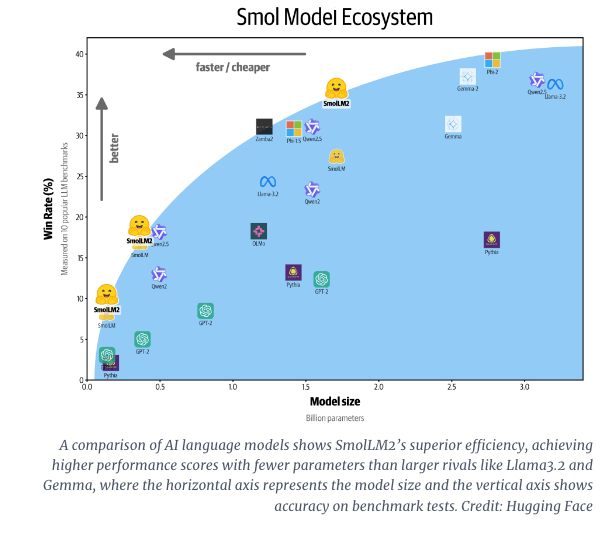

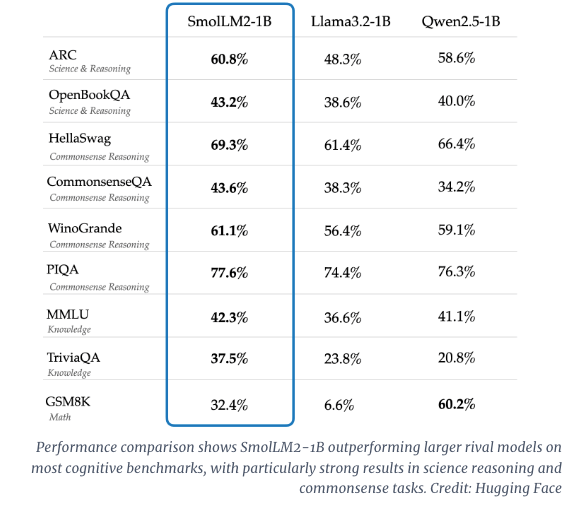

A few days ago, Hugging Face released SmolLM2, a new family of compact language models designed to perform well on limited computational resources. Available in 135M, 360M, and 1.7B parameter sizes, these models can run efficiently on smartphones and edge devices, so AI can operate locally even with constrained processing power and memory. The 1.7B model outperforms Meta’s Llama 3.2 1B on several benchmarks, including science reasoning and commonsense tasks.
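To see why these sizes suit phones and edge devices, a rough back-of-the-envelope estimate helps: raw weight memory is parameter count times bytes per parameter. The sketch below assumes the nominal parameter counts and standard storage precisions. It ignores activations, KV cache, and runtime overhead, so real on-device usage will be higher.

```python
# Approximate weight-only memory footprint for the three SmolLM2 sizes.
# Assumption: nominal parameter counts (135M, 360M, 1.7B); activations,
# KV cache, and runtime overhead are NOT included.

PARAM_COUNTS = {
    "SmolLM2-135M": 135e6,
    "SmolLM2-360M": 360e6,
    "SmolLM2-1.7B": 1.7e9,
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Print one line per model with its footprint at each precision.
    for model, n in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{prec}: {weight_memory_gb(n, b):.2f} GB"
            for prec, b in BYTES_PER_PARAM.items()
        )
        print(f"{model} -> {sizes}")
```

By this estimate, the 1.7B model needs about 3.4 GB for its weights in 16-bit precision and well under 1 GB at 4-bit. This is why quantization is usually part of running such models on memory-limited devices.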

As the AI industry grapples with immense computational demands, SmolLM2 arrives at the right moment. Unlike massive LLMs that typically depend on cloud services, SmolLM2 brings advanced AI capabilities directly to devices. It tackles latency, data privacy, and cost, all of which are critical for smaller companies and developers.

📊 Efficient AI without Compromising Performance: SmolLM2 models excel at instruction following, knowledge, reasoning, and mathematics. The 1.7B model was trained on 11 trillion tokens drawn from datasets such as FineWeb-Edu and from specialized coding and math sources. The models deliver competitive performance on tasks such as text rewriting, summarization, and function calling.

💡 Transforming Edge Computing: Compact models like SmolLM2 open the door to AI in mobile apps, IoT, and privacy-sensitive fields like healthcare and finance. With strong results on mathematical reasoning benchmarks like GSM8K, SmolLM2 challenges the notion that bigger models are always better.

This release also signals a shift toward efficient, accessible AI that puts cutting-edge capabilities within reach of smaller players, not just tech giants.

🚦 I am happy to see two areas of focus emerging in language models:

1️⃣ Large-Scale Models: These will continue to grow in size and power, driven by tech giants able to bear the high training costs of next-gen LLMs.

2️⃣ Compact Models: A hot, competitive space where smaller models deliver exceptional performance when fine-tuned for specific tasks or domains. This is where we’ll see ecosystems of compact models working together within multi-agent systems.