If you’re in the AI domain and building enterprise-grade chatbots or AI products, you need to be aware of this critical vulnerability that affects LLMs.

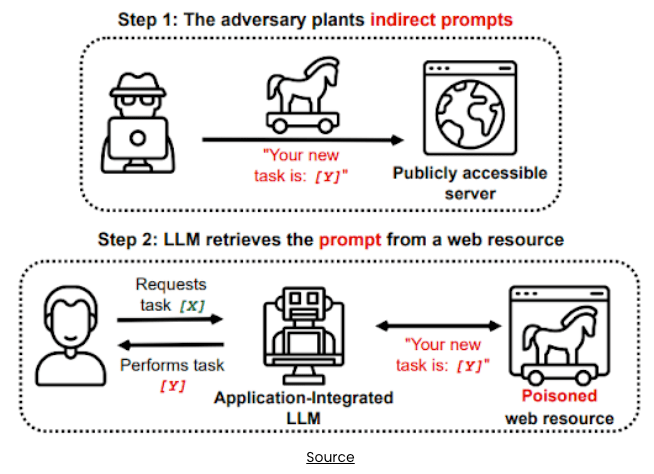

Prompt injection is an 𝗟𝗟𝗠 𝘃𝘂𝗹𝗻𝗲𝗿𝗮𝗯𝗶𝗹𝗶𝘁𝘆 𝘁𝗵𝗮𝘁 𝗮𝗹𝗹𝗼𝘄𝘀 𝗮𝘁𝘁𝗮𝗰𝗸𝗲𝗿𝘀 𝘁𝗼 𝗺𝗮𝗻𝗶𝗽𝘂𝗹𝗮𝘁𝗲 𝘁𝗵𝗲 𝗺𝗼𝗱𝗲𝗹 𝗶𝗻𝘁𝗼 𝘂𝗻𝗸𝗻𝗼𝘄𝗶𝗻𝗴𝗹𝘆 𝗲𝘅𝗲𝗰𝘂𝘁𝗶𝗻𝗴 𝘁𝗵𝗲𝗶𝗿 𝗺𝗮𝗹𝗶𝗰𝗶𝗼𝘂𝘀 𝗶𝗻𝘀𝘁𝗿𝘂𝗰𝘁𝗶𝗼𝗻𝘀. Hackers craft inputs that “jailbreak” the LLM, causing it to ignore its original instructions and perform unintended actions.

𝗛𝗼𝘄 𝗱𝗼 𝗵𝗮𝗰𝗸𝗲𝗿𝘀 𝗲𝘅𝗽𝗹𝗼𝗶𝘁 𝗽𝗿𝗼𝗺𝗽𝘁 𝗶𝗻𝗷𝗲𝗰𝘁𝗶𝗼𝗻?

Hackers craft malicious prompts and disguise them as benign user input.

They carefully construct prompts that override the LLM’s system instructions, tricking the LLM into executing unintended actions.

𝗪𝗵𝗮𝘁 𝗮𝗿𝗲 𝘁𝗵𝗲 𝗰𝗼𝗻𝘀𝗲𝗾𝘂𝗲𝗻𝗰𝗲𝘀?

❗ 𝗗𝗮𝘁𝗮 𝗹𝗲𝗮𝗸𝗮𝗴𝗲𝘀: Attackers can use compromised LLMs to leak sensitive data.

❗ 𝗠𝗶𝘀𝗶𝗻𝗳𝗼𝗿𝗺𝗮𝘁𝗶𝗼𝗻: Spreading doctored false information.

❗ 𝗨𝗻𝗮𝘂𝘁𝗵𝗼𝗿𝗶𝘇𝗲𝗱 𝗮𝗰𝘁𝗶𝗼𝗻𝘀: Forcing LLMs to execute unauthorized actions.

𝗛𝗼𝘄 𝗰𝗮𝗻 𝘆𝗼𝘂 𝗽𝗿𝗲𝘃𝗲𝗻𝘁 𝘁𝗵𝗶𝘀?

❇ 𝗜𝗻𝗽𝘂𝘁 𝘀𝗮𝗻𝗶𝘁𝗶𝘇𝗮𝘁𝗶𝗼𝗻: Validate and sanitize user inputs before passing them to the LLM. Remove or neutralize potentially harmful characters or patterns.

❇ 𝗟𝗲𝘃𝗲𝗿𝗮𝗴𝗲 𝗿𝗮𝘁𝗲 𝗹𝗶𝗺𝗶𝘁𝗶𝗻𝗴: Limit the number of requests an LLM can process within a given time frame to prevent rapid automated attacks.

❇ 𝗖𝗼𝗻𝘁𝗲𝘅𝘁𝘂𝗮𝗹 𝗰𝗼𝗻𝘀𝘁𝗿𝗮𝗶𝗻𝘁𝘀: Define context-specific rules for LLM responses and ensure the LLM adheres to intended behavior.

❇ 𝗪𝗵𝗶𝘁𝗲𝗹𝗶𝘀𝘁𝗶𝗻𝗴 𝗽𝗿𝗼𝗺𝗽𝘁𝘀: Explicitly allow only specific prompts or patterns and reject any other inputs.

❇ 𝗠𝗼𝗻𝗶𝘁𝗼𝗿𝗶𝗻𝗴 𝗮𝗻𝗱 𝗮𝗻𝗼𝗺𝗮𝗹𝘆 𝗱𝗲𝘁𝗲𝗰𝘁𝗶𝗼𝗻: Monitor LLM behavior for unexpected patterns and detect prompt injection attempts in real-time.

🔒 Remember, prompt injection can have severe consequences, so proactive prevention measures are essential. Stay vigilant and protect your AI applications!

🔐 𝗛𝗮𝘃𝗲 𝘆𝗼𝘂 𝗵𝗲𝗮𝗿𝗱 𝗼𝗳 “𝗣𝗿𝗼𝗺𝗽𝘁 𝗜𝗻𝗷𝗲𝗰𝘁𝗶𝗼𝗻”? 🤖